There is plenty of room for growth in the field of hockey analytics. In particular, machine learning algorithms and deep learning methods, which have become popular in a wide variety of fields, are mysteriously scarce. Machine learning has been used to solve classification and prediction problems in science and finance to great effect. There is a great deal of potential for innovation in applying these methods to the data available to us in sports like hockey, where much has yet to be learned.

I don’t profess to be a machine learning expert. I am, in fact, a student. I treat my NHL database as a sandbox in which to test new theories and methods. Most recently, I’ve become interested in a branch of machine learning called swarm intelligence and, more specifically, in one of its methods called Particle Swarm Optimization.

Particle Swarm Optimization (PSO) is modelled after behaviour observed in groups of animals such as ant colonies, bee hives, or schools of fish. Swarm intelligence seeks to create some collective, global intelligence from a group of simple agents interacting with each other and their environment according to basic rules. In much the same way that a colony of termites can build complex structures that no individual in its midst could possibly conceive of, a swarm intelligence algorithm can perform tasks whose complexities far exceed the capabilities of any one of its agents.

Let’s be honest, though. “Swarm intelligence” is mainly a buzzword. While its name may conjure images of bees buzzing around one’s computer, particle swarm optimization is really just a fancy optimization algorithm.

Sorry to disappoint you.

That’s not to say PSO can’t provide a powerful and versatile alternative to traditional optimization procedures like gradient descent. It certainly won’t deter me from investigating its uses in hockey analysis. As a proof of concept, I set out to develop a player rating system optimized by a swarm trained on real expert opinion. Before I get into that, though, I’ll discuss the basic principles governing the optimization algorithm.

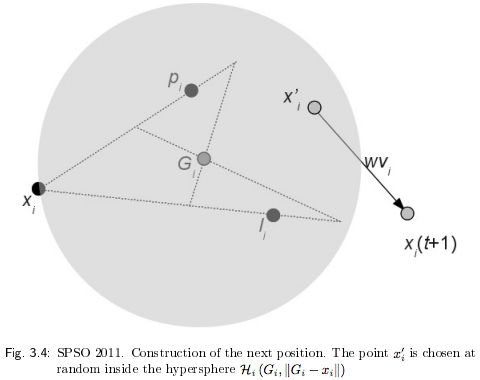

I used an implementation of the Standard Particle Swarm Optimization (SPSO) outlined by Maurice Clerc. In his informal description, Clerc describes a search space (which I prefer to call a solution space) through which a group of particles (the swarm) travels. For each point in the solution space a fitness can be computed, and a global optimum exists at the point where the best fitness is found.[1] The particles travel the solution space according to a set of simple rules and thereby converge on the global optimum.

In Clerc’s implementation, a particle need only know five items at each iteration:

After being initialized with random positions and velocities, the particles interact with one another and share information. Each agent’s velocity is reevaluated at each iteration according to information it has gathered from its own trajectory and the memory of other particles in its neighbourhood.
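To make the update rule concrete, here is a minimal sketch of a particle swarm in plain Python. It is a simplified variant rather than Clerc’s SPSO: every particle treats the whole swarm as its neighbourhood, and the names `pso_minimize` and `sphere`, along with the inertia and attraction constants, are assumptions chosen for illustration only.

```python
import random

def pso_minimize(fitness, dim, n_particles=30, n_iter=100,
                 w=0.72, c1=1.19, c2=1.19, bounds=(-1.0, 1.0), seed=0):
    """Minimize `fitness` with a simplified, global-best particle swarm."""
    rng = random.Random(seed)
    lo, hi = bounds
    span = hi - lo
    # Random initial positions and velocities.
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[rng.uniform(-span, span) for _ in range(dim)] for _ in range(n_particles)]
    # Each particle remembers the best point it has visited so far.
    best_pos = [p[:] for p in pos]
    best_fit = [fitness(p) for p in pos]
    # Simplification (assumption): the neighbourhood is the entire swarm.
    g = min(range(n_particles), key=best_fit.__getitem__)
    g_pos, g_fit = best_pos[g][:], best_fit[g]

    for _ in range(n_iter):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # New velocity = inertia + pull toward the particle's own best
                # + pull toward the best point known to its neighbourhood.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (best_pos[i][d] - pos[i][d])
                             + c2 * r2 * (g_pos[d] - pos[i][d]))
                pos[i][d] += vel[i][d]
            f = fitness(pos[i])
            if f < best_fit[i]:
                best_pos[i], best_fit[i] = pos[i][:], f
                if f < g_fit:
                    g_pos, g_fit = pos[i][:], f
    return g_pos, g_fit


def sphere(x):
    """Toy error function with its minimum of 0 at the origin."""
    return sum(v * v for v in x)


best, err = pso_minimize(sphere, dim=5)
```

Swapping `sphere` for any other function that maps a position to an error is all it takes to point the swarm at a different problem.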

In more ways than one, this mechanism is not dissimilar to the process by which a bee colony might collectively locate the best place to build a hive. While a single bee can achieve the modest task of finding a good place to build a hive[2], it is far less likely to locate the best place without the help of other bees. Resisting convergence on a local optimum is a strength of PSO.

I was curious to find out if I could design a swarm capable of learning how to evaluate hockey players by following these crude guidelines. To achieve this, I randomly initialized 2,000 particles in a 390-dimensional solution space representing weights to be used in some player rating formula containing 40 statistical parameters. The swarm was given 300 iterations to find a global optimum – the point in the solution space corresponding with the weights that yield the best player ratings when applied to the formula.

In order to assess the quality of the ratings being produced in every iteration – that is, the fitness of every particle – I first had to collect input from real hockey experts. Twenty-one experts participated in the survey process. Their backgrounds and roles in the hockey world ranged from scouting to journalism. Each expert was asked to select which of two players they believed to currently be superior in each of several hundred random head-to-head match-ups. In total, 4,699 answers were collected, with a consensus reached on 37% of the questions.

The error function computes ratings for active players using the weights given by an agent’s position in the solution space, ranks the players by those ratings, then counts the number of disagreements with the expert responses. Through swarm optimization, agents navigating the multidimensional solution space are expected to converge on a set of weights that yield the ratings which least displease the expert panel.
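An error function of this kind could be sketched as follows. The rating formula here is assumed to be a plain weighted sum, which may not match the formula actually used, and the names `rate` and `count_disagreements`, as well as the toy players and picks, are invented for illustration.

```python
def rate(stats, weights):
    """Hypothetical rating formula: a weighted sum of normalized stats."""
    return sum(w * s for w, s in zip(weights, stats))


def count_disagreements(weights, player_stats, expert_picks):
    """Count the expert answers that the ratings contradict.

    player_stats: dict mapping player name -> list of normalized stats
    expert_picks: list of (preferred, other) pairs, one per expert answer
    """
    ratings = {name: rate(stats, weights) for name, stats in player_stats.items()}
    # A tie is counted as a disagreement, since the expert did pick a side.
    return sum(1 for preferred, other in expert_picks
               if ratings[preferred] <= ratings[other])


# Toy data (made up): two stats per player, four expert answers.
player_stats = {"A": [0.9, 0.2], "B": [0.4, 0.8], "C": [0.1, 0.1]}
expert_picks = [("A", "B"), ("A", "C"), ("B", "C"), ("B", "A")]

errors = count_disagreements([1.0, 0.0], player_stats, expert_picks)
```

A particle’s position plays the role of `weights`, so its fitness is simply the value this function returns; lower is better.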

In a way, we’re teaching our population to collectively appeal to authority. Taking cues from their environment and one another, the particles avoid areas in the solution space (loosely interpretable in this analogy as opinions on the qualities of hockey players) that cause them to suffer derision at the hands of experts. It is not difficult to imagine that a colony of insects may behave in a similar manner to avoid places that are harmful to them. For example, a swarm may seek out the most shaded area in its surroundings as its members recoil from the harsh sun.

The parameters used to construct skater ratings include raw TOI, goal and assist totals, as well as various rate and team-relative stats over the last three seasons. Additionally, the fraction of votes received for various trophies and awards, including the Hart, Norris and Calder, was used. Goaltender ratings were produced using a different array of statistics, including low, medium and high danger save percentage and goals saved above average. All parameters were normalized prior to optimization.
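Which normalization was applied is not specified here. One common choice is the z-score, sketched below under that assumption; the function name `z_scores` and the sample values are made up.

```python
from statistics import mean, pstdev


def z_scores(values):
    """Rescale a column of raw stats to mean 0 and standard deviation 1."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


toi_minutes = [1200.0, 1500.0, 1800.0]  # invented values, not real data
normalized = z_scores(toi_minutes)
```

Putting every parameter on a comparable scale keeps a large-valued stat such as raw TOI from dominating the weights simply because of its units.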

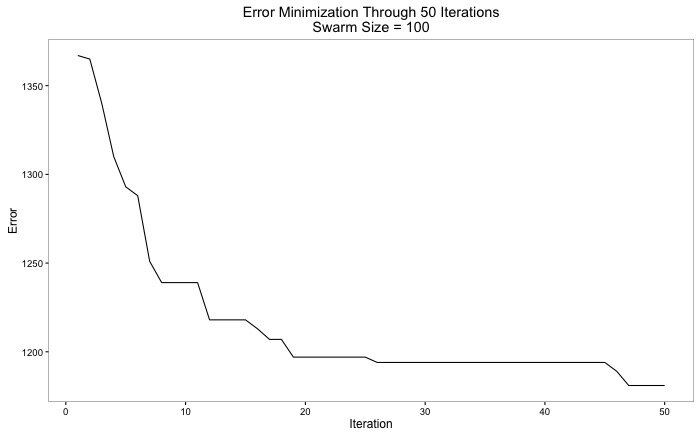

Below are illustrations of the error minimization and fitness distribution of a small swarm through 50 iterations:

The complete optimization reached an error of 1,152, meaning the ratings produced by the swarm agreed with the expert panel at a rate just over 75%. For comparison, my own answers to the same survey questions produced an agreement rate of 78.4%.
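As a quick check on that figure, the arithmetic can be reproduced in a couple of lines. This assumes the error counts disagreements out of all 4,699 collected answers, which is consistent with the numbers quoted but is not stated outright; `agreement_rate` is a hypothetical helper.

```python
def agreement_rate(disagreements, total_answers):
    """Fraction of expert answers that the ratings agree with."""
    return 1.0 - disagreements / total_answers


rate_pct = 100 * agreement_rate(1152, 4699)
print(f"{rate_pct:.1f}%")  # prints 75.5%
```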

While these rankings contain some oddities, they generally make intuitive sense. The swarm evidently undervalues top-end goalies, but when viewed separately the goalie and skater rankings are even more agreeable.

Interestingly, the swarm identified Sidney Crosby as the best player even though the only information supplied about him by the experts in the survey is that he is unanimously better than Matt Stajan. Similarly, Erik Karlsson is ranked as the best defenceman when he was only voted as superior to Devin Setoguchi and Nikita Nesterov.

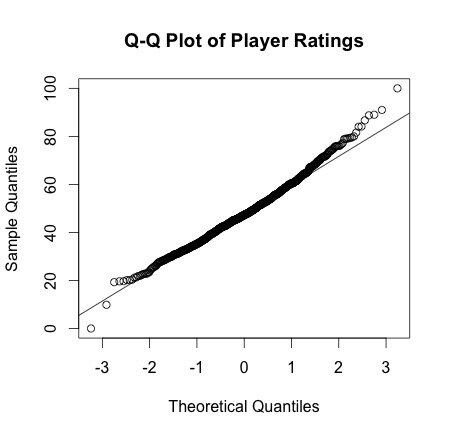

The player ratings are demonstrably not normally distributed, as shown below:

Ultimately, the goal here should be for the swarm to learn how to rate NHL players, not to produce the best possible ratings for this current sample of active players. Optimizing only for the current sample would be like teaching to the test. To check that the player rating formula extends to a generalized set of NHL players outside of those used to train the swarm, I applied the same formula to all players active between the 2007 and 2010 NHL seasons. Though there are players who belong to both sets, there is no overlap in the data used to produce the ratings.

I’m too young to remember this NHL era, but I take it as a good sign that I recognize at least several of the names at the top.

While an interesting exercise, these swarm-generated ratings are fundamentally limited in their usefulness. Because they are moulded by human opinion, they are also confined by our understanding of hockey. Hence, this type of analysis can, at best, rival the abilities of expert analysts at rating hockey players.

An interesting potential application of such methods is ranking draft-eligible players using statistics from various hockey leagues. Scouting lists and actual draft order can serve as archived expert input on which to train a swarm. It’s a sizeable project, but could potentially eliminate the need for scouts!

I’m only half kidding.

References

1. “Best” depends on how fitness is evaluated. Typically, this is a minimum of the error function, as is the case here.
2. I ask biologists to ignore for now the fact a single bee could not build a hive anyway.